Students’ autonomous robot project could be a lifesaver

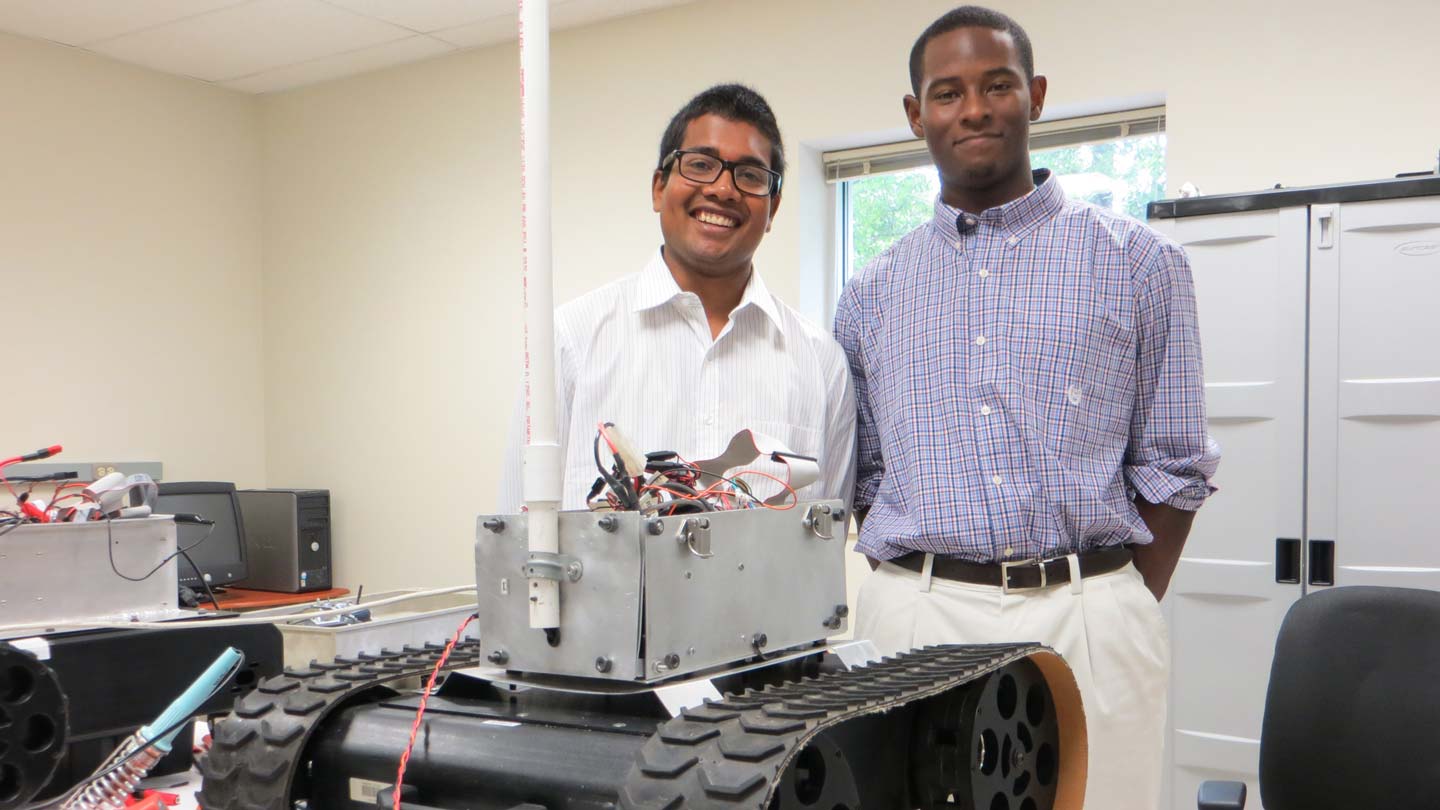

Mechanical and Aerospace Engineering graduate student Sai Susheel Praneeth Kode, left, and his research assistant, rising sophomore Computer Engineering major Tevon Walker, with the robot they use for research. The box atop the machine houses an onboard computer and an Inertial Measurement Unit navigation sensor. At left is the white Ethernet cable used to link the robot to a desktop computer for task programming. The robot runs 30 to 40 minutes on battery power.

Jim Steele | UAH

HUNTSVILLE, Ala. (July 29, 2014) - The building is on fire, but the firefighters are unsure what is fueling it or how hazardous the situation is. They place a robot at the entrance and program in a rudimentary set of directions based on a building map or even an occupant's recollection of the layout.

The robot starts into the building but discovers a discrepancy between the mapped coordinates and the actual layout. Game over? Not if the current experimental work of two University of Alabama in Huntsville (UAH) students bears fruit.

Advised by Mechanical and Aerospace Engineering assistant professor Dr. Farbod Fahimi, whose guidance they say has been invaluable, graduate student Sai Susheel Praneeth Kode and his research assistant, undergraduate Computer Engineering major Tevon Walker, are exploring ways to create an electronic "neural network" between the robot and the operator. The network would empower both by freeing the robot to carry out tasks assigned by the operator within set parameters.

The autonomous robot, as they call it, would be able to detect and act on discrepancies between the path the operator has given it and the conditions it actually finds. It would relay that information to the operations base via the network and adjust to complete its mission. That goal is still on the research horizon for the pair.


Walker, a rising sophomore who says he is "very fortunate" to work on such advanced robotics at UAH, says that for now the pair can control the robot only with a fixed set of directions.

"Right now, we can give it the inputs and it executes it, but it doesn't know how accurate it is," Walker says. "When we add the autonomous learning control, it will allow it to automatically adjust for errors."

The robot is equipped with an onboard computer, a solid-state drive and an Inertial Measurement Unit navigation sensor. The sensor can detect the robot's heading, pitch and roll, GPS position, velocity and acceleration.

"We program the paths into it using an Ethernet cord - straight line, circle or whatever pathway we want it to take," Kode says. "Once we set it down, the GPS figures out its position and the computer and the learning algorithm execute the path."

A neural network would decouple the robot's movements from the operator's exact coordinates, in essence untethering the robot so that it can learn to move on its own within set parameters while still remaining under the operator's control.

"There's no direct mathematical correlation between the robot and the control computer in what we are trying to do," says Kode, whose thesis focuses on the mathematics behind the software that will let the robot "decide" how to handle discrepancies between its directions and what it actually finds. "We are doing a number of tests to determine what this relationship is. Whatever we program to this robot, we then take it outside so that we can analyze how it is doing relative to what we want. When we match the robot and the computer through the neural network, then it will be possible."

For the robot, the neural network will "bridge the gap between the input and output signals," Walker says. Using differential equations, the programming will allow the robot to "tell you what it is doing."

"It tries to figure out what you are telling it," says Kode. "And since we are working on a learning mathematical model, it will work with any number of robotic configurations."

Untethering a remotely controlled robot from direct operational cause and effect could make such robots far more accessible to operators and allow the robot to complete its task even when it faces unforeseen obstacles, the students say. In a perfected system, the device could move out of the laboratory and the hands of specialized researchers and into the hands of rescuers like that firefighter, who could operate it with only rudimentary training. Once there, it could save lives.

For more information, contact:

Dr. Farbod Fahimi

256.824.5671

farbod.fahimi@uah.edu

OR

Jim Steele

256.824.2772

jim.steele@uah.edu