Immersion Integrations Research Center student researchers and scientists, including Christopher Bero; ARL scientist Dr. Jeff Hansberger; Christopher Kaufman; Sarah Meacham; and Victoria Blakely.

Michael Mercier | UAH

The Immersive Integration Systems Center in Olin B. King Technology Hall was assembled in 2013 as a research partnership with the U.S. Army Research Laboratory (ARL) and is part of ARL’s Open Campus initiative, which encourages advances in research areas of relevance to the Army. The Center comprises the Manned/Unmanned Collaborative Systems Integration (M/UCSI) Laboratory and the Human Interface Innovation (H2I) Laboratory.

“We’ve designed the center so any of our students, including international students, can work there and answer research questions,” says ISEEM Department Chair Dr. Paul Collopy. “Additionally, we have several separately funded ISEEM-specific projects that keep the labs busy and useful.”

Among the basic equipment found in the center is a set of powerful game-capable computers that run a military simulation training program called Virtual Battlespace 3. “In the lab, we can simulate operator experiences such as a pilot operating a Predator drone,” says Dr. Collopy.

According to Dr. Thomas Davis, chief of ARL’s Air and C2 Systems Branch at Redstone Arsenal, researchers are seeking to identify aspects that benefit the soldier and those that could potentially hinder their mission.

Overall, much of the lab research is centered on studying the collaboration between people and autonomous systems. “The ARL scientists in our lab are leading some very interesting research projects,” says Dr. Collopy.

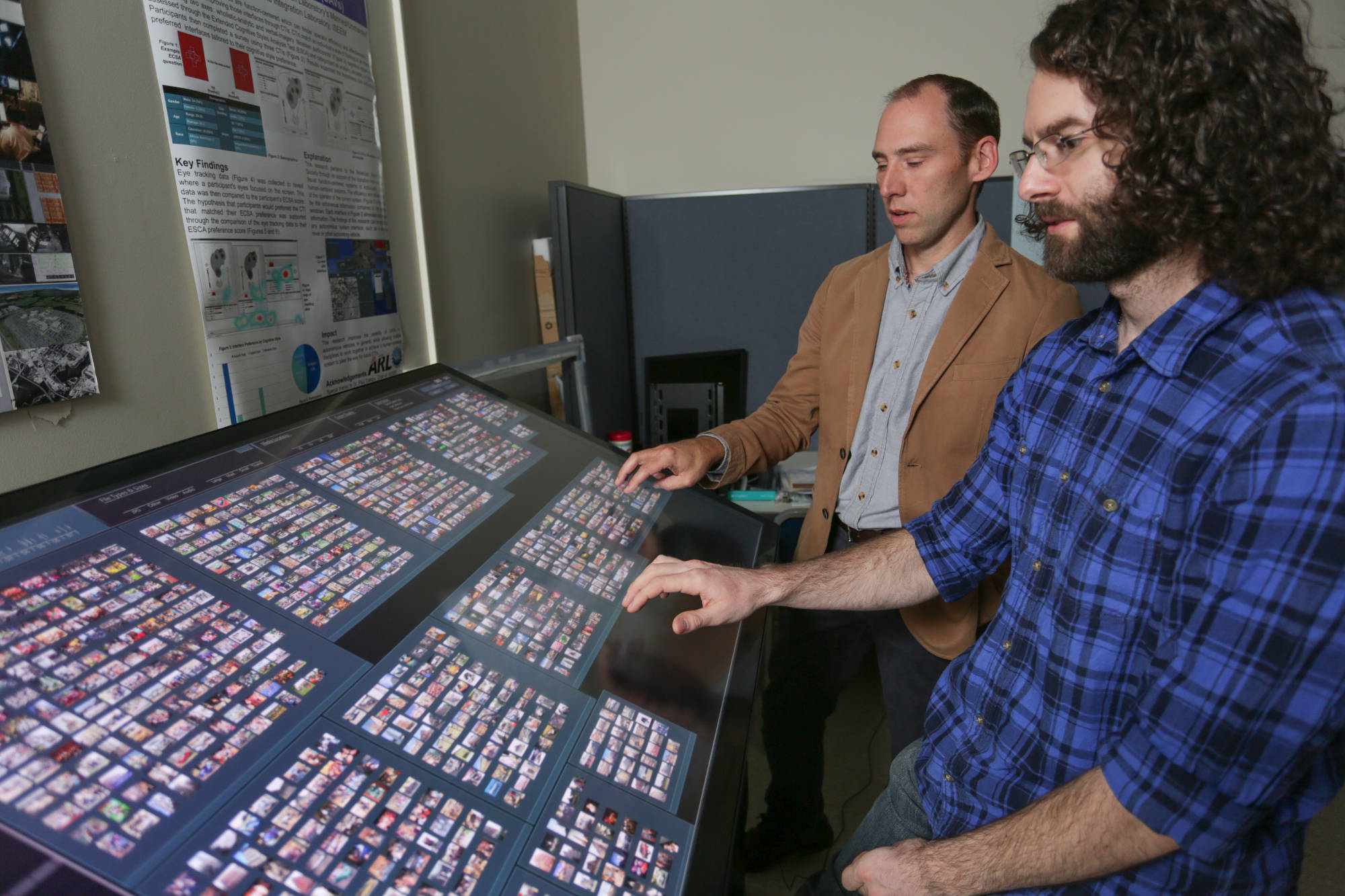

Dr. Jeff Hansberger from ARL is beginning a new research effort in the area of human-computer interaction and interface design. He is working with others from the H2I Lab to design and prototype new ways to both visualize and interact with information for intelligence analysts.

“The amount of visual information like photographs and video data available for inspection is growing at ever-increasing rates,” says Dr. Hansberger. “The one thing that information consumes is the user’s attention, which we are all in short supply of. It’s our job to design and craft the information to maximize the user’s time, attention, and enhance their performance.”

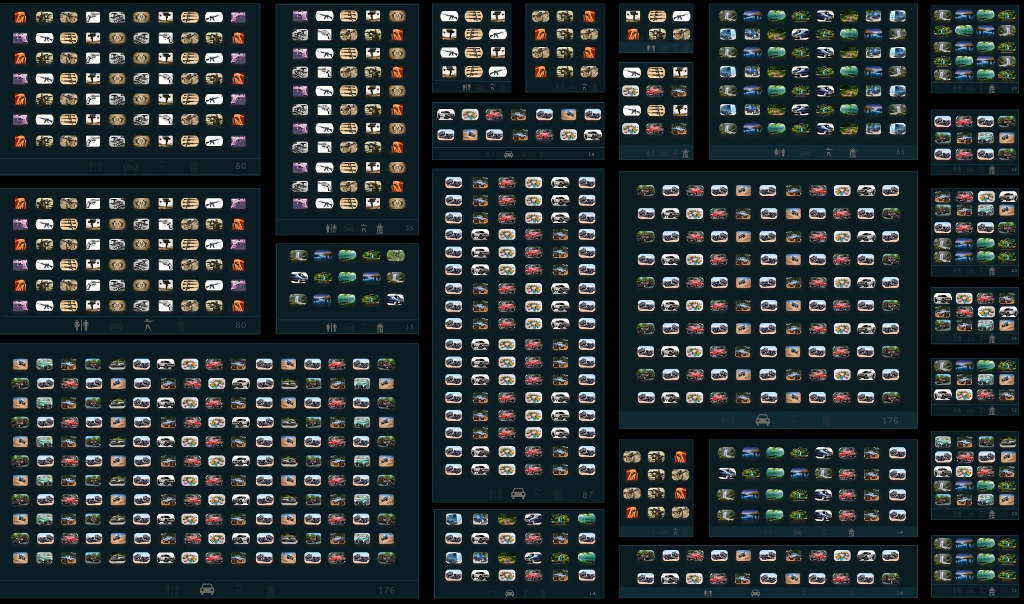

The image collection interface developed by Dr. Hansberger provides a visual landscape of all the images within a collection so the user can view the number and size of the clusters identified by the system.

Dr. Hansberger | UAH

Hansberger and his team of students and researchers from psychology and multiple engineering disciplines are focusing on more direct and natural means of interacting with information. They are making use of the same motion capture technology that movie makers use, but instead of creating characters on the big screen, they are tracking the user’s hand and arm movements for gesture-based input to the interface (think “Tony Stark” from the Iron Man movies). Instead of limiting the user’s actions to sliding a mouse around in two dimensions, the interface allows users the full range of their hands to manipulate information in the same way they manipulate everyday objects.

In addition to new ways to visualize and interact with information, the H2I Lab is investigating ways to augment the user’s performance. Computer vision algorithms provide computer-aided search and filtering for objects embedded within photographs. Research is also focused on augmenting the user’s cognitive capabilities, such as their ability to identify visual targets in a large collection of images and improve their vigilance and attention. “Ultimately, we’re trying to put more effort into the science and design of the information so the user can put less effort into consuming the information and more into making sense of the information,” says Dr. Hansberger.

Dr. Jeff Hansberger and Christopher Kaufman

Michael Mercier | UAH